LLM easy buttons and context rot

On an AI tools and developer productivity study, the allure of easy buttons, and context rot traps

In a recent study from the non-profit research institute METR, experienced open-source developers were, surprisingly, less productive (in terms of development speed) when using AI tools. This probably feels like a strange result to anyone who has felt the rush of watching a recent frontier model one-shot a complex coding task. What is going on here?

The authors emphasize that the study does not conclude that AI tools fail to improve developer productivity in general, and that a number of factors could have contributed to the slowdown. Simon Willison published a blog post highlighting some of these factors, along with his own intuition about what the primary contributing factor might be.

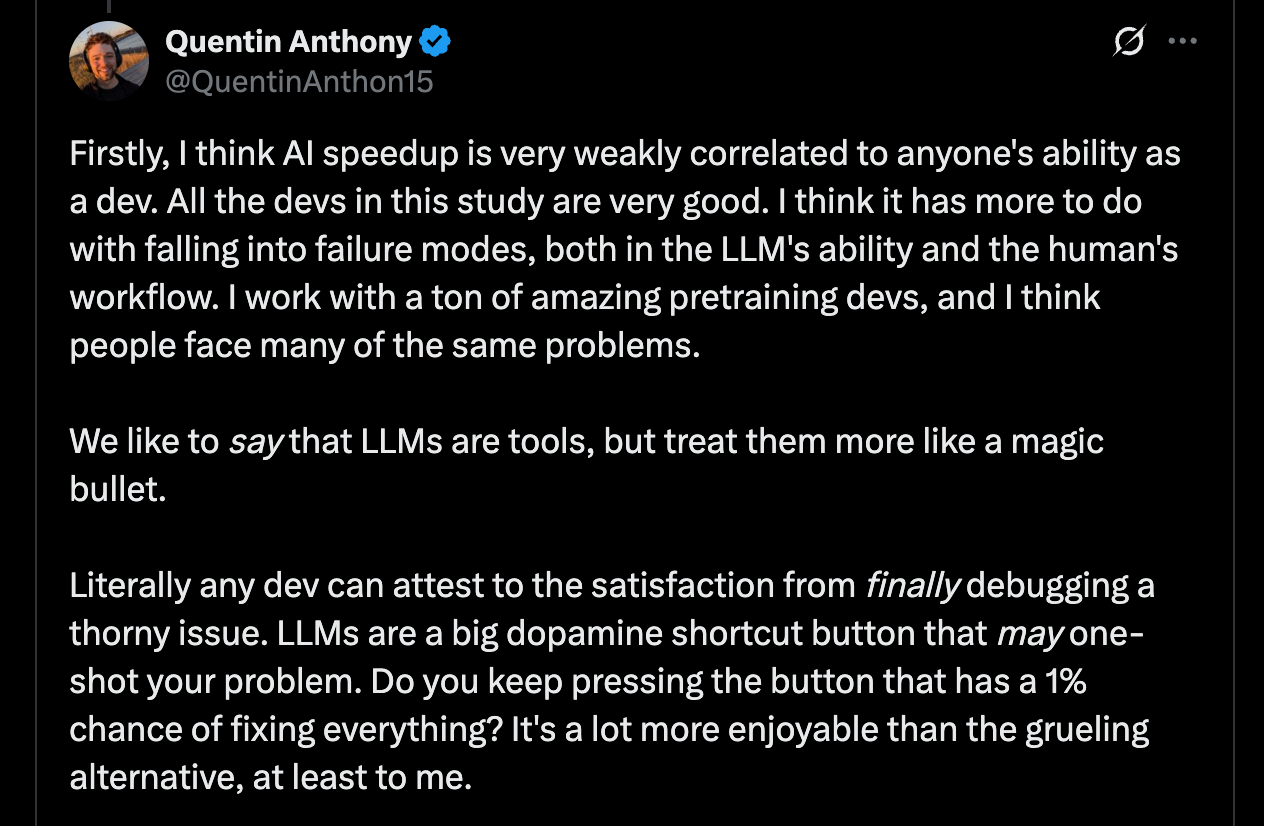

I thought the most interesting explanation came from Quentin Anthony (one of the study's participants), which centered on human-AI failure modes stemming from both LLM shortcomings and human biases.

An LLM is kind of like the Staples easy button. The promise is that if you press it, your problem (at least a coding-related one) will be solved. And every time it actually works, you get a hit of dopamine. The memory of that dopamine then increases the desire to press the button again for the next problem.

The issue is that with LLMs, the probability of the button actually working changes with each press. The first press, to implement an API route in an Express/TypeScript backend, might have a 95% probability of success. The next press, to implement parallelization in low-level systems code, might have only a 20% probability of success (perhaps due to less coverage in the training data). And while we are certainly capable of thinking critically before each press about the most effective way to solve the problem at hand, it just feels so much more enjoyable and convenient to press the button instead.

Another issue is that hidden underneath the button is a machine capable of amazing feats of intelligence, but still susceptible to distraction (much like its human counterparts). In the best case, you press the button once and the machine solves your problem. In the worst case, you press the button again and again, each time thinking "it's so close, just one more time and it'll get it right," and each time giving the machine more context about the problem and its environment. In reality, the machine is spinning in circles, its attention pulled in too many directions that seem promising but are ultimately just distractions. The machine falls victim to context rot.

The allure of the easy button can make it difficult to escape the context rot trap. You could look past the button and try to modify the context given to the machine, or even reset that context entirely, so the machine is less likely to get confused. Or you could just press the button again.
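To make those choices concrete, here is a minimal Python sketch. It assumes a hypothetical `Conversation` class (not any real LLM SDK's API), approximates tokens by counting words, and uses an assumed `effective_budget` to stand in for the amount of context a model can reason over well. Pressing the button again only appends; modifying or subtracting context and resetting are the higher-friction alternatives.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical chat history used only for illustration."""

    effective_budget: int  # assumed: how much context the model handles well
    messages: list[str] = field(default_factory=list)

    def tokens(self) -> int:
        # Crude stand-in for a tokenizer: one token per whitespace-separated word.
        return sum(len(m.split()) for m in self.messages)

    def press_again(self, prompt: str) -> None:
        # The "easy button": keep appending, never checking the budget.
        self.messages.append(prompt)

    def drop(self, is_distraction: Callable[[str], bool]) -> None:
        # Subtract context: remove messages judged to be distractions.
        self.messages = [m for m in self.messages if not is_distraction(m)]

    def reset(self, summary: str) -> None:
        # Start fresh, carrying over only a short, carefully written summary.
        self.messages = [summary]

    def over_budget(self) -> bool:
        return self.tokens() > self.effective_budget


# Usage: two failed retries push the history past the assumed budget.
chat = Conversation(effective_budget=20)
chat.press_again("Implement parallel merge for the sorted chunks")
chat.press_again("Still failing: attempt 2 deadlocks on the shared buffer")
chat.press_again("Still failing: attempt 3 deadlocks after reordering the locks")
rotted = chat.over_budget()

# Subtracting the failed attempts brings it back under budget.
chat.drop(lambda m: m.startswith("Still failing"))
trimmed = chat.over_budget()
```

The design choice worth noticing is that `press_again` is the only method that requires no judgment; `drop` and `reset` both force you to decide what the model actually needs to see.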

This dynamic is not exclusive to how we use LLMs. We see these human behavioral patterns in lots of places. It is cognitively easier to give an LLM more context in one more prompt to try to fix a problem than it is to carefully edit or remove context, or to start a fresh chat. It is cognitively easier to give a direct report one more instruction to fix their work than it is to carefully review and correct inaccuracies in their understanding of the task. It is cognitively easier to keep tweaking a rough code prototype until it can be deployed to production than it is to discard the prototype and carefully build the system from solid first principles. It feels like we are just one prompt, one instruction, one tweak away. And the more we chase the dopamine that might be on the other side of that prompt, instruction, or tweak, the more context rot can accumulate.

As long as the probability of task failure for an LLM is non-zero, and the context window length that an LLM can effectively reason over is finite (note that this effective length typically differs from the advertised context window length, and is lower), I suspect we will need to get used to thinking not just about what context to add, but also about what context to modify and subtract. This increases friction and isn't as easy as continuing to press the button (typing in the next prompt). But this might also be how we escape the context rot trap.